CI/CD has been a lifesaver for organizations with many projects, big or small. In my case, I have a Laravel project that needs its JavaScript to be built before deployment. There are a lot of ways to do this, but this time I think I found a combination that deploys the project with minimal clutter, costs as little as possible, and works with essentially any production and deployment infrastructure.

Let’s go through my development setup first for a quick overview. This project actually consists of three parts: the back end (called Portmafia), the back end’s front end (the admin panel), and my personal website. Portmafia hosts several kinds of content for my personal website. It needs a pipeline because the admin panel is built with React (since I want to use React Admin from Marmelab). I don’t need fancy stuff like containerization, isolation, or auto-scaling. I just need the front end to be built and the back end’s dependencies to be properly installed, and that’s it.

With those requirements, here are several solutions we can consider:

- Build it into a container and deploy it as is.

- Build it on CI/CD, commit the changes and push them to git, then run `git pull` in production.

- Build it on CI/CD, zip it, then push the zip to prod.

- Build it on CI/CD, then `rsync` it to prod.

So let’s talk about them one by one.

1. Build It Into a Container and Deploy It as Is.

Build the JavaScript in the project, install the PHP dependencies, then package the project into an image, usually based on the official PHP Docker image or one of its derivatives. To deploy the built image, the team can use Kubernetes, Docker Swarm, or even the classic `docker compose pull && docker compose up -d`.

Why It Works

Packaging projects into Docker images is very popular for its versatility, so this is a viable solution for this project too. Since Docker Hub hosts the official PHP image, all we need to do is configure the required extensions, install the dependencies, and build the JavaScript files. The deployment can then be done with various tools and tricks.

Why It Doesn’t Fit

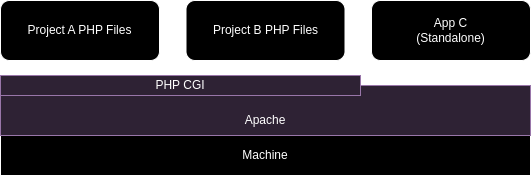

Running PHP projects usually requires either Apache with PHP CGI or Nginx with PHP-FPM (I’ll call these ‘runners’ from now on). One instance of a runner can usually serve several PHP projects, rather than running one process per project (see Fig. 1). This, however, does not work in Docker.

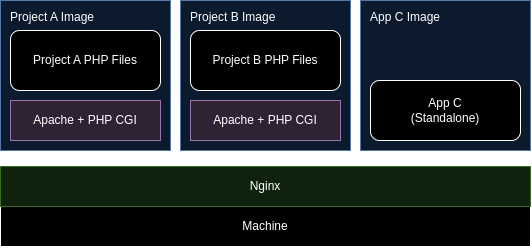

Since each project is packaged into its own Docker image, we cannot share a runner instance across containers. This means every project needs its own runner process, which increases the overhead and memory footprint (see Fig. 2). If your app can run with just the PHP CLI, this option is still viable for you. However, the PHP CLI only provides a development server, and production-grade setups usually require more sophisticated memory management, so you will need to make sure that your runner and framework can actually handle the requests.

That being said, packaging PHP into Docker makes sense if your project requires unusual extensions or configuration that cannot be shared with other projects, or if it has security considerations that call for isolation. It also makes sense if this is one of only a few PHP projects in your company’s portfolio. Dedicating a machine just to run PHP is kind of… absurd for low-traffic apps, so it makes more sense to use your main infrastructure. If the app can be treated as just another container, maintaining it is also much easier.

2. Build it on CI/CD, and Then Commit the Changes and Push Them to Git, and git pull in Production.

In this solution, you build the JavaScript files in CI/CD, then commit them to a separate branch that exists only for built files and artifacts. Whatever is committed to that branch is what gets deployed to the server. After that, you push the commit to your git hosting platform, then either trigger your Git-Auto-Deploy instance or trigger `git pull` via SSH.
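To make the moving parts concrete, here is a minimal sketch of what the CI job and the server-side trigger might look like. The branch name `builds`, the `public/build` folder, the `npm` commands, and the helper names are all assumptions for illustration, not taken from my actual pipeline.

```shell
#!/usr/bin/env bash
set -euo pipefail

# Print the command instead of running it when DRY_RUN=1.
run() {
  if [ "${DRY_RUN:-0}" = "1" ]; then
    echo "+ $*"
  else
    "$@"
  fi
}

# publish_build SOURCE_REF: merge the source into the artifact branch,
# build, and commit the result. "builds" and "public/build" are hypothetical.
publish_build() {
  local source_ref="$1"
  run git fetch origin builds
  run git checkout -B builds FETCH_HEAD
  run git merge --no-edit "$source_ref"   # this is where conflicts can appear
  run npm ci
  run npm run build
  run git add --force public/build        # build output is usually git-ignored
  run git commit --allow-empty -m "Build from $source_ref"
  run git push origin builds
}

# trigger_pull REMOTE APP_DIR: ask the server to fetch the new build over SSH.
trigger_pull() {
  run ssh "$1" "cd '$2' && git pull --ff-only"
}
```

Running `DRY_RUN=1 publish_build "$CI_COMMIT_SHA"` prints the eight commands without touching the repository, which is a cheap way to review the job before giving it push access.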

This obviously comes with pros and cons. The pros of this solution are:

- Trackable builds.

  You have a whole history of older builds. If your team ever needs to track down regressions or bugs, this can help.

- Easy deployment.

  You can just `git pull` on your server, and done.

- Easy management of downstream authentication.

  Most git hosting platforms have deploy keys or deploy tokens in their configuration panel. This means you can manage your server authentication directly on the git hosting dashboard.

… then the cons:

- Complex CI/CD configuration.

  You need to handle various git issues, e.g. conflicts, rebases, squashes, etc.

- Complex builder authentication.

  If your company has compliance requirements that need commits to be signed, you have more homework in configuring your authentication 🙂

- Repository size.

  Since you push the artifacts back to your git hosting, your repo will grow. If you’re using a cloud platform, your concern will be pricing based on repo size. If you’re using an on-premise git hosting platform, your concern is mainly housekeeping.

With that being said, let’s talk about why it works, and why it doesn’t exactly fit with my current requirements.

Why It Works

This solution leaves alone the files that don’t need to be touched. It only updates the files that need to be updated, deletes the files that need to be deleted, and creates the files that need to be created. Everything is neatly tracked and properly managed.

Why It Doesn’t Fit

This doesn’t exactly fit my current requirements because it’s high on maintenance and setup cost. (The cost here is paid in sanity points xD)

First, as mentioned in the pros and cons, it has a complex CI/CD script and a complex security setup (auth, SSH, PGP, etc.).

Second, I use mostly free tools, which means I need to be conscious about the projects I make. It’s not about the size (GitLab generously offers 10 GB per repo for free), but housekeeping the branches is going to be a pain in the neck since I’ll be automating the software maintenance.

… and finally, I need to configure the deployment triggers: either SSH in and run `git pull`, or install Git-Auto-Deploy on the server. Not every hosting provider supports SSH, and not every hosting platform has git installed on its servers. So moving to another host will cost me extra time researching whether the platform supports it.

3. Build It on CI/CD, Zip It, Then Push The Zip to Prod

The build steps are the same as in the previous solution. The only difference is that we don’t commit the changes back to the repo; instead, we zip them into a single file and push that to the machine. On the machine, you can either replace the existing files in place, or extract to a new folder and update the web server configuration (an extra script is needed).
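As a rough sketch of the "replace the files in place" variant, assuming the server is reachable over SSH and has `unzip` installed. The function name, archive name, and paths are hypothetical.

```shell
#!/usr/bin/env bash
set -euo pipefail

# Print the command instead of running it when DRY_RUN=1.
run() {
  if [ "${DRY_RUN:-0}" = "1" ]; then
    echo "+ $*"
  else
    "$@"
  fi
}

# ship_zip ARCHIVE REMOTE TARGET_DIR: pack the project, upload it, unpack it.
ship_zip() {
  local archive="$1" remote="$2" target="$3"
  # Pack the project, leaving out things the server does not need.
  run zip -qr "$archive" . -x ".git/*" "node_modules/*"
  run scp "$archive" "$remote:/tmp/$archive"
  # unzip -o overwrites existing files, but it never removes files that
  # are no longer part of the archive, so stale files stay on the server.
  run ssh "$remote" "unzip -oq '/tmp/$archive' -d '$target' && rm '/tmp/$archive'"
}
```

A call such as `ship_zip build.zip deploy@example.com /var/www/portmafia` would run all three steps; prefixing it with `DRY_RUN=1` only prints them.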

Why It Works

This solution works with my project because it has less overhead than the previous one. This means I don’t have to tinker a lot with git configuration and its nitty-gritty. This solution also updates the files as needed, though maybe not that properly, because it still leaves unwanted files behind.

Why It Doesn’t Fit

As mentioned, this is not a perfect fit because it doesn’t delete stale files. We can make a script to delete the files first, but man, I’m just too lazy to manage the scripts.

Another solution is to create symlinks for several folders (e.g. uploads, cache, etc.), but this will definitely need scripts too 😅.

In addition to that, this solution requires me to set up an agent on the production server, and I definitely need to set up SSH access too (how else would you even push the zip file to production?).

4. Build It on CI/CD Then rsync Them to Prod.

In this solution, you build the JavaScript project as usual, but you don’t need an extra step to package it. Instead, you deploy it via rsync. rsync syncs the current folder against the folder on the server: it updates and copies files and, when run with the `--delete` flag, deletes files so that the server matches the local folder. In addition to that, you can provide a list of files and folders to be ignored. This way, I can keep the cache, uploads, and logs folders on the production server.
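Here is a minimal sketch of such a deploy step. The exclude list, the `deploy` function, and the `RSYNC` override are assumptions for illustration; adjust the paths to whatever your project keeps on the server.

```shell
#!/usr/bin/env bash
set -euo pipefail

# Paths rsync should ignore (hypothetical list). The leading slash anchors
# each pattern to the root of the transfer.
EXCLUDES=("/.git/" "/node_modules/" "/.env" "/storage/logs/" "/storage/framework/cache/" "/public/uploads/")

# deploy SRC DEST: mirror SRC onto DEST over SSH.
deploy() {
  local src="$1" dest="$2" path
  local args=(-az --delete)          # --delete removes files that vanished locally
  for path in "${EXCLUDES[@]}"; do
    args+=("--exclude=$path")        # excluded paths are neither sent nor deleted
  done
  # Set RSYNC=echo to preview the arguments without touching the server.
  "${RSYNC:-rsync}" "${args[@]}" "$src" "$dest"
}
```

A call would look like `deploy ./ deploy@example.com:/var/www/portmafia/`, where the trailing slash on the source tells rsync to copy the folder's contents rather than the folder itself. Adding `--dry-run` to the arguments is a safe way to see what `--delete` would remove before the first real run.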

Why It Works

This solution works because it properly deletes unused files, similar to the git solution, but this one is much cleaner because we don’t track the artifacts. That also means we don’t have to set up complex authentication for CI/CD to push the files back to git.

This solution also doesn’t need an agent running on the production machine. Although rsync requires SSH access, we can find an equivalent replacement such as lftp. This means I only need to make sure that the hosting platform supports either SSH or FTP access.

Why It Fits

This solution perfectly fits my requirements because it doesn’t need specialized software on the production side. If anything, I just need to make sure the hosting platform I use supports SSH access. To run migrations automatically I need SSH access anyway, so the SSH requirement is inevitable.
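The migration step can be sketched as a single SSH command that runs after the files are synced. The function name, the `SSH` override, and the paths are assumptions; `php artisan migrate --force` is Laravel's way to skip the confirmation prompt it shows in production.

```shell
#!/usr/bin/env bash
set -euo pipefail

# post_deploy REMOTE APP_DIR: run the database migrations on the server.
post_deploy() {
  local remote="$1" app_dir="$2"
  # Set SSH=echo to preview the remote command instead of running it.
  "${SSH:-ssh}" "$remote" "cd '$app_dir' && php artisan migrate --force"
}
```

For example, `post_deploy deploy@example.com /var/www/portmafia` would run the migrations in that folder on the server.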

Expanding The rsync Solution For Everyone

After thinking through a mitigation plan in case I ever need to migrate the server for maintenance, I realized this solution can actually be used on most hosting platforms. PHP hosting providers normally use cPanel or Plesk behind the scenes, and both are known to support SSH and FTP access, though availability depends on the provider. Some only offer SSH access on higher plans, while others provide it on all plans. If they don’t allow SSH connections, then just go with lftp or rclone.
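For the FTP-only case, a rough lftp equivalent could look like the sketch below. The function names and the `FTP_USER` and `FTP_PASSWORD` variables are assumptions. How exclude patterns match nested paths is worth checking with `mirror --dry-run` before trusting `--delete` on a real site.

```shell
#!/usr/bin/env bash
set -euo pipefail

# mirror_script LOCAL_DIR REMOTE_DIR [EXCLUDE_GLOB...]: print the lftp
# commands for an upload mirror.
mirror_script() {
  local local_dir="$1" remote_dir="$2" glob
  shift 2
  # --reverse uploads (local to remote); --delete removes remote files
  # that no longer exist locally.
  local cmd="mirror --reverse --delete"
  for glob in "$@"; do
    cmd+=" --exclude-glob $glob"
  done
  printf '%s %s %s\nquit\n' "$cmd" "$local_dir" "$remote_dir"
}

# ftp_deploy HOST LOCAL_DIR REMOTE_DIR [EXCLUDE_GLOB...]: feed the commands
# to lftp on stdin. Credentials come from the environment.
ftp_deploy() {
  local host="$1"
  shift
  mirror_script "$@" | lftp -u "$FTP_USER,$FTP_PASSWORD" "$host"
}
```

A call might look like `ftp_deploy ftp.example.com ./ /public_html cache/ uploads/`. Keeping the command generation separate from the lftp call makes it easy to print and review the script first.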

Publisher

Since Publisher v1.1.0, I’ve added rsync to the image by default. You don’t have to install rsync in the before_script section anymore.

You can check my current Portmafia CI/CD configuration if you want to see how it is being used. You can also check the Publisher repo if you want to learn more.